Physicist at the Institute of Nuclear Physics in Kraków interested in magnetism and always ready to explain everything to everyone in simple terms. He is currently working on adding more scientific papers to his collection, accompanied by his son and another baby on its way. A perfectionist with an acute eye for detail, he has a unit converter in his brain and uses it to compare prices at the supermarket. Loves peace and quiet, especially during hiking.

and Jack Bowater


Welcome to our t-test calculator! Here you can not only easily perform one-sample t-tests, but also two-sample t-tests, as well as paired t-tests.

Do you prefer to find the p-value from t-test, or would you rather find the t-test critical values? Well, this t-test calculator can do both! 😊

What does a t-test tell you? Take a look at the text below, where we explain what actually gets tested when various types of t-tests are performed. Also, we explain when to use t-tests (in particular, whether to use the z-test vs. t-test) and what assumptions your data should satisfy for the results of a t-test to be valid. If you've ever wanted to know how to do a t-test by hand, we provide the necessary t-test formula, as well as tell you how to determine the number of degrees of freedom in a t-test.

A t-test is one of the most popular statistical tests for location, i.e., it deals with the population(s) mean value(s).

There are three types of t-tests that you can perform: the one-sample t-test, the two-sample t-test, and the paired t-test.

In the next section, we explain when to use which.

Remember that a t-test can only be used for one or two groups. If you need to compare three (or more) means, use the analysis of variance (ANOVA) method.

The t-test is a parametric test, meaning that your data has to fulfill some assumptions:

If your sample doesn't fit these assumptions, you can resort to nonparametric alternatives. Visit our Mann–Whitney U test calculator or the Wilcoxon rank-sum test calculator to learn more. Other possibilities include the Wilcoxon signed-rank test or the sign test.

Your choice of t-test depends on whether you are studying one group or two groups:

The two-sample t-test is sometimes referred to as an independent samples t-test, or an unpaired samples t-test.

So, you've decided which t-test to perform. These next steps will tell you how to calculate the p-value from t-test or its critical values, and then which decision to make about the null hypothesis.

The degrees of freedom are essential, as they determine the distribution followed by your T-score (under the null hypothesis). If there are d degrees of freedom, then the distribution of the test statistic is the t-Student distribution with d degrees of freedom. This distribution has a shape similar to N(0,1) (bell-shaped and symmetric) but has heavier tails. If the number of degrees of freedom is large (>30), which typically happens for large samples, the t-Student distribution is practically indistinguishable from N(0,1).

💡 The t-Student distribution owes its name to William Sealy Gosset, who, in 1908, published his paper on the t-test under the pseudonym "Student". Gosset worked at the famous Guinness Brewery in Dublin, Ireland, and devised the t-test as an economical way to monitor the quality of beer. Cheers! 🍺🍺🍺

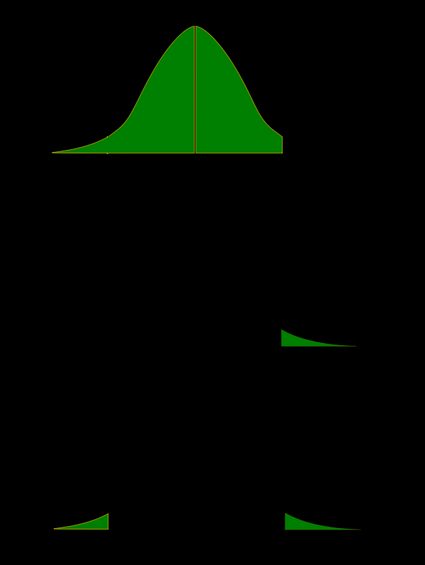

Recall that the p-value is the probability (calculated under the assumption that the null hypothesis is true) that the test statistic will produce values at least as extreme as the T-score produced for your sample. As probabilities correspond to areas under the density function, p-value from t-test can be nicely illustrated with the help of the following pictures:

The following formulae say how to calculate p-value from t-test. By $\mathrm{cdf}_{t,d}$ we denote the cumulative distribution function of the t-Student distribution with $d$ degrees of freedom:

However, the cdf of the t-distribution is given by a somewhat complicated formula. To find the p-value by hand, you would need to resort to statistical tables, where approximate cdf values are collected, or to specialized statistical software. Fortunately, our t-test calculator determines the p-value from t-test for you in the blink of an eye!
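As a rough illustration of what such software does, here is a minimal sketch in Python that uses only the standard library. It assumes the usual conventions: the left-tailed p-value is the cdf at the T-score, the right-tailed p-value is one minus the cdf, and the two-tailed p-value is twice the cdf at minus the absolute T-score. The function names (`t_pdf`, `t_cdf`, `p_value`) are made up for this example, and the cdf is approximated by numerical integration (Simpson's rule) rather than by the method any particular calculator uses.

```python
import math

def t_pdf(x: float, d: float) -> float:
    """Density of the t-Student distribution with d degrees of freedom."""
    log_norm = (math.lgamma((d + 1) / 2) - math.lgamma(d / 2)
                - 0.5 * math.log(d * math.pi))
    return math.exp(log_norm - (d + 1) / 2 * math.log1p(x * x / d))

def t_cdf(x: float, d: float) -> float:
    """Approximate cdf: integrate the density on [0, |x|] with Simpson's rule
    and use the symmetry of the distribution around zero."""
    a = abs(x)
    if a == 0.0:
        return 0.5
    n = 2 * max(100, int(100 * a))  # even number of Simpson intervals
    h = a / n
    total = t_pdf(0.0, d) + t_pdf(a, d)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, d)
    area = total * h / 3
    return 0.5 + area if x > 0 else 0.5 - area

def p_value(t_score: float, d: float, tail: str = "two") -> float:
    """p-value for a given T-score; tail is 'left', 'right' or 'two'."""
    if tail == "left":
        return t_cdf(t_score, d)
    if tail == "right":
        return 1.0 - t_cdf(t_score, d)
    if tail == "two":
        return 2.0 * t_cdf(-abs(t_score), d)
    raise ValueError("tail must be 'left', 'right' or 'two'")
```

For example, `p_value(2.228, 10)` comes out very close to 0.05, in line with standard t-tables. Because the tail area is obtained by subtraction from 0.5, this sketch loses relative accuracy for extremely small p-values.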

Recall that in the critical values approach to hypothesis testing, you need to set a significance level, α, before computing the critical values, which in turn give rise to critical regions (a.k.a. rejection regions).

Formulas for critical values employ the quantile function of the t-distribution, i.e., the inverse of the cdf:

To decide the fate of the null hypothesis, just check if your T-score lies within the critical region:
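The critical values approach can be sketched in Python as follows. This is an illustrative sketch only: it assumes the standard conventions (left-tailed critical value at the α quantile, right-tailed at the 1 − α quantile, two-tailed at ± the 1 − α/2 quantile), approximates the cdf numerically, and inverts it by bisection. The names `t_quantile`, `critical_values`, and `reject_null` are hypothetical helpers, not part of any library.

```python
import math

def t_pdf(x: float, d: float) -> float:
    """Density of the t-Student distribution with d degrees of freedom."""
    log_norm = (math.lgamma((d + 1) / 2) - math.lgamma(d / 2)
                - 0.5 * math.log(d * math.pi))
    return math.exp(log_norm - (d + 1) / 2 * math.log1p(x * x / d))

def t_cdf(x: float, d: float) -> float:
    """Approximate cdf via Simpson's rule on [0, |x|] plus symmetry."""
    a = abs(x)
    if a == 0.0:
        return 0.5
    n = 2 * max(100, int(100 * a))  # even number of Simpson intervals
    h = a / n
    total = t_pdf(0.0, d) + t_pdf(a, d)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, d)
    area = total * h / 3
    return 0.5 + area if x > 0 else 0.5 - area

def t_quantile(p: float, d: float) -> float:
    """Inverse of the cdf, found by bisection."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    if p == 0.5:
        return 0.0
    if p < 0.5:
        return -t_quantile(1.0 - p, d)  # symmetry around zero
    lo, hi = 0.0, 1.0
    while t_cdf(hi, d) < p:  # widen the bracket until it contains the quantile
        lo, hi = hi, hi * 2
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_cdf(mid, d) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def critical_values(alpha: float, d: float, tail: str = "two") -> tuple:
    """Critical value(s) for significance level alpha."""
    if tail == "left":
        return (t_quantile(alpha, d),)
    if tail == "right":
        return (t_quantile(1.0 - alpha, d),)
    if tail == "two":
        c = t_quantile(1.0 - alpha / 2, d)
        return (-c, c)
    raise ValueError("tail must be 'left', 'right' or 'two'")

def reject_null(t_score: float, alpha: float, d: float, tail: str = "two") -> bool:
    """True if the T-score falls in the critical (rejection) region."""
    cv = critical_values(alpha, d, tail)
    if tail == "left":
        return t_score <= cv[0]
    if tail == "right":
        return t_score >= cv[0]
    return t_score <= cv[0] or t_score >= cv[1]
```

With 10 degrees of freedom and α = 0.05, the two-tailed critical values from this sketch are approximately ±2.228, so a T-score of 2.5 leads to rejecting the null hypothesis while a T-score of 2.0 does not.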

One-sample t-test formula:

$$t = \frac{\bar{x} - \mu_0}{s} \cdot \sqrt{n}$$

Number of degrees of freedom in t-test (one-sample) = $n - 1$.
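To make the one-sample formula concrete, here is a small Python sketch. It assumes that x̄ is the sample mean, s the sample standard deviation (with n − 1 in the denominator), n the sample size, and μ₀ the hypothesized population mean. The function name `one_sample_t` and the data are invented for illustration.

```python
import math
import statistics

def one_sample_t(sample, mu0):
    """Return (T-score, degrees of freedom) for a one-sample t-test."""
    n = len(sample)
    x_bar = statistics.fmean(sample)   # sample mean
    s = statistics.stdev(sample)       # sample standard deviation (n - 1 denominator)
    t = (x_bar - mu0) / s * math.sqrt(n)
    return t, n - 1

# Hypothetical measurements, tested against a hypothesized mean of 4
t, df = one_sample_t([2, 4, 4, 4, 5, 5, 7, 9], mu0=4)
```

For this made-up sample, the mean is 5 and the sample variance is 32/7, so the T-score equals √1.75 ≈ 1.32 with 7 degrees of freedom.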

In particular, if this pre-determined difference is zero ($\Delta = 0$):

Formally, to perform a t-test, we should additionally assume that the variances of the two populations are equal (this assumption is called the homogeneity of variance).

There is a version of the t-test that can be applied without the assumption of homogeneity of variance: it is called Welch's t-test. For your convenience, we describe both versions.

Use this test if you know that the two populations' variances are the same (or very similar).

Two-sample t-test formula (with equal variances):

$$t = \frac{\bar{x}_1 - \bar{x}_2 - \Delta}{s_p \cdot \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$

where $s_p$ is the so-called pooled standard deviation, which we compute as:

$$s_p = \sqrt{\frac{(n_1 - 1) s_1^2 + (n_2 - 1) s_2^2}{n_1 + n_2 - 2}}$$

Number of degrees of freedom in t-test (two samples, equal variances) = $n_1 + n_2 - 2$.
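The equal-variances calculation can be sketched in Python like this. The sketch assumes that s₁² and s₂² are the sample variances and that Δ is the pre-determined difference between the population means under the null hypothesis. The function name `pooled_t` and the data are hypothetical.

```python
import math
import statistics

def pooled_t(sample1, sample2, delta=0.0):
    """Return (T-score, degrees of freedom) for a two-sample t-test
    that assumes equal population variances."""
    n1, n2 = len(sample1), len(sample2)
    m1, m2 = statistics.fmean(sample1), statistics.fmean(sample2)
    v1, v2 = statistics.variance(sample1), statistics.variance(sample2)
    # Pooled standard deviation
    s_p = math.sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    t = (m1 - m2 - delta) / (s_p * math.sqrt(1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

# Hypothetical data: two groups of five observations each
t, df = pooled_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
```

Here the sample variances are 2.5 and 10, the pooled standard deviation is 2.5, and the T-score is −3/√2.5 ≈ −1.90 with 8 degrees of freedom.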

Use this test if the variances of your populations are different.

Two-sample Welch's t-test formula if variances are unequal:

$$t = \frac{\bar{x}_1 - \bar{x}_2 - \Delta}{\sqrt{s_1^2/n_1 + s_2^2/n_2}}$$

The exact number of degrees of freedom in Welch's t-test (two-sample t-test with unequal variances) is very difficult to determine. We can approximate it with the help of the following Satterthwaite formula:

$$\frac{(s_1^2/n_1 + s_2^2/n_2)^2}{\frac{(s_1^2/n_1)^2}{n_1 - 1} + \frac{(s_2^2/n_2)^2}{n_2 - 1}}$$

Alternatively, you can take the smaller of $n_1 - 1$ and $n_2 - 1$ as a conservative estimate for the number of degrees of freedom.

🔎 The Satterthwaite formula for the degrees of freedom can be rewritten as a scaled weighted harmonic mean of the degrees of freedom of the respective samples, $n_1 - 1$ and $n_2 - 1$, with weights proportional to $(s_1^2/n_1)^2$ and $(s_2^2/n_2)^2$, i.e., to the squared estimated variances of the two sample means.
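A short Python sketch of Welch's t-test may help to see how the pieces fit together. It assumes s₁² and s₂² are the sample variances, and it returns both the Satterthwaite approximation and the conservative estimate for the degrees of freedom. The function name `welch_t` and the data are made up for this example.

```python
import math
import statistics

def welch_t(sample1, sample2, delta=0.0):
    """Return (T-score, Satterthwaite df, conservative df) for Welch's t-test."""
    n1, n2 = len(sample1), len(sample2)
    m1, m2 = statistics.fmean(sample1), statistics.fmean(sample2)
    a = statistics.variance(sample1) / n1   # s1^2 / n1
    b = statistics.variance(sample2) / n2   # s2^2 / n2
    t = (m1 - m2 - delta) / math.sqrt(a + b)
    # Satterthwaite approximation (usually not an integer)
    df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    # Conservative alternative: the smaller of n1 - 1 and n2 - 1
    df_conservative = min(n1 - 1, n2 - 1)
    return t, df, df_conservative

# Hypothetical data with visibly different spreads
t, df, df_min = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
```

For this data, s₁²/n₁ = 0.5 and s₂²/n₂ = 2, so the Satterthwaite formula gives 6.25/1.0625 ≈ 5.88 degrees of freedom, while the conservative estimate is 4.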

As we commonly perform a paired t-test when we have data about the same subjects measured twice (before and after some treatment), let us adopt the convention of referring to the samples as the pre-group and post-group.

Typically, this pre-determined difference is zero. We can then reformulate the hypotheses as follows:

Paired t-test formula

In fact, a paired t-test is technically the same as a one-sample t-test! Let us see why it is so. Let $x_1, \ldots, x_n$ be the pre observations and $y_1, \ldots, y_n$ the respective post observations. That is, $x_i, y_i$ are the before and after measurements of the $i$-th subject.

For each subject, compute the difference, $d_i := x_i - y_i$. All that happens next is just a one-sample t-test performed on the sample of differences $d_1, \ldots, d_n$. Take a look at the formula for the T-score:

$$t = \frac{\bar{x} - \Delta}{s} \cdot \sqrt{n}$$

Here $\bar{x}$ and $s$ are the mean and the standard deviation of the sample of differences, and $n$ is the number of pairs.

Number of degrees of freedom in t-test (paired): $n - 1$
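The reduction of a paired t-test to a one-sample t-test on the differences can be sketched in Python as follows. The function name `paired_t` and the pre/post measurements are hypothetical, and the differences are taken as pre minus post, following the convention used in the text.

```python
import math
import statistics

def paired_t(pre, post, delta=0.0):
    """Return (T-score, degrees of freedom) for a paired t-test,
    computed as a one-sample t-test on the differences pre - post."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same number of observations")
    diffs = [x - y for x, y in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.fmean(diffs)   # mean of the differences
    s_d = statistics.stdev(diffs)      # standard deviation of the differences
    t = (mean_d - delta) / s_d * math.sqrt(n)
    return t, n - 1

# Hypothetical before/after measurements for four subjects
t, df = paired_t(pre=[10, 12, 9, 11], post=[8, 11, 9, 8])
```

The differences here are 2, 1, 0, and 3, with mean 1.5 and sample variance 5/3, so the T-score is 3·√0.6 ≈ 2.32 with 3 degrees of freedom.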

We use a Z-test when we want to test the population mean of a normally distributed dataset, which has a known population variance. If the number of degrees of freedom is large, then the t-Student distribution is very close to N(0,1).

Hence, if there are many data points (at least 30), you may swap a t-test for a Z-test, and the results will be almost identical. However, for small samples with unknown variance, remember to use the t-test because, in such cases, the t-Student distribution differs significantly from the N(0,1)!
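To see how quickly the two tests converge, one can compare two-tailed p-values for the same score under the t-Student distribution and under N(0,1). The sketch below is illustrative only: it approximates the t cdf by numerical integration and uses `statistics.NormalDist` from the Python standard library for the normal cdf. The helper names are made up for this example.

```python
import math
from statistics import NormalDist

def t_pdf(x: float, d: float) -> float:
    """Density of the t-Student distribution with d degrees of freedom."""
    log_norm = (math.lgamma((d + 1) / 2) - math.lgamma(d / 2)
                - 0.5 * math.log(d * math.pi))
    return math.exp(log_norm - (d + 1) / 2 * math.log1p(x * x / d))

def t_cdf(x: float, d: float) -> float:
    """Approximate cdf via Simpson's rule on [0, |x|] plus symmetry."""
    a = abs(x)
    if a == 0.0:
        return 0.5
    n = 2 * max(100, int(100 * a))  # even number of Simpson intervals
    h = a / n
    total = t_pdf(0.0, d) + t_pdf(a, d)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, d)
    area = total * h / 3
    return 0.5 + area if x > 0 else 0.5 - area

def two_tailed_p_t(score: float, d: float) -> float:
    """Two-tailed p-value under the t-Student distribution."""
    return 2.0 * t_cdf(-abs(score), d)

def two_tailed_p_z(score: float) -> float:
    """Two-tailed p-value under the standard normal distribution."""
    return 2.0 * NormalDist().cdf(-abs(score))

# Same score, different reference distributions
for d in (5, 30, 200):
    print(d, round(two_tailed_p_t(2.0, d), 4), round(two_tailed_p_z(2.0), 4))
```

For a score of 2.0, the t-based p-value with 5 degrees of freedom is roughly twice the normal-based one, while with 30 or more degrees of freedom the gap shrinks to under one percentage point.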

🙋 Have you concluded you need to perform the z-test? Head straight to our z-test calculator!

A t-test is a widely used statistical test that analyzes the means of one or two groups of data. For instance, a t-test is performed on medical data to determine whether a new drug really helps.

The different types of t-tests are the one-sample t-test, the two-sample t-test, and the paired t-test.

To find the t-value: